Smoke vs Sanity Testing: When and Why to Use Each

A few releases ago I watched an urgent hot-fix stall for forty minutes because no one could answer a simple question: “Is the build even alive?”

That moment crystallized the real value of fast health checks. Full regression suites are wonderful, but they take time the business rarely has. To keep delivery flowing, teams rely on two safety nets: smoke and sanity testing.

Below we explore how the two differ, which pains they solve for CTOs, QA leaders, and product managers, and why handing most of the grunt work to an AI engine is often the smartest move.

Why “Quick” Tests Matter

- Release velocity keeps climbing. Frequent deployments demand feedback in minutes, not hours.

- Downtime is expensive. A payment outage sends users straight to competitors.

- Teams stay focused on new work. Engineers would rather build features than patch brittle scripts.

Smoke and sanity tests strike the right balance: short, clear, and easy to automate with AI agents that write and run tests. The trick is knowing where one ends and the other begins.

What Is Smoke Testing?

Smoke testing runs a handful of baseline scenarios that prove an application starts and performs its most critical functions. “Does it even breathe?” is the question you ask.

Key traits

- Covers the “skeleton” of the product, not the details

- Runs immediately after a build or deployment

- Blocks further testing if it fails

- Executes in minutes, not hours

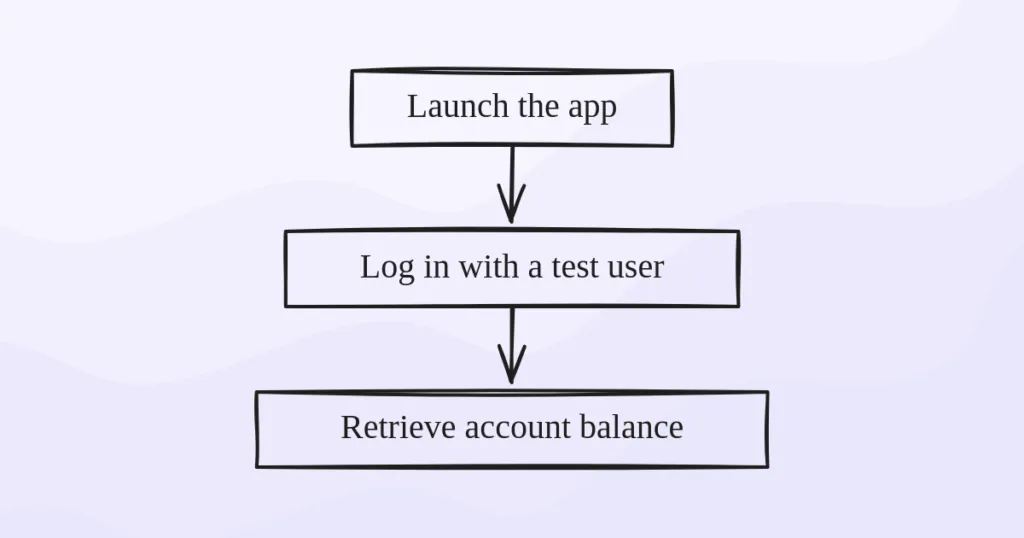

Real-world snapshot

A mobile-banking team ships a nightly build. Their smoke run is three steps:

- Launch the app

- Log in with a test user

- Retrieve account balance

Any failure paints the build red, and CI refuses to pass it to QA. Why? Burning compute on a full regression is pointless if login is broken by a bad certificate.
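Here is a minimal sketch of that gate in Python. It assumes each smoke step is a plain callable that raises on failure. The step functions (`launch_app`, `log_in`, `fetch_balance`) are hypothetical stubs, not part of any real framework. The runner stops at the first failure and returns a non-zero code, which is how most CI systems decide to mark a job red.

```python
import sys
from typing import Callable, Sequence


def run_smoke(steps: Sequence[tuple[str, Callable[[], None]]]) -> int:
    """Run smoke steps in order and stop at the first failure.

    Returns 0 if every step passed and 1 otherwise, so the value can be
    handed straight to sys.exit() in a CI job.
    """
    for name, step in steps:
        try:
            step()
        except Exception as exc:  # any exception counts as a failed step
            print(f"SMOKE FAIL at '{name}': {exc}", file=sys.stderr)
            return 1  # later steps never run: the build is not viable
        print(f"smoke ok: {name}")
    return 0


# Hypothetical stubs for the mobile-banking example; real steps would
# drive the app or its API instead of doing nothing.
def launch_app() -> None:
    pass


def log_in() -> None:
    pass


def fetch_balance() -> None:
    pass


SMOKE_STEPS = [
    ("launch app", launch_app),
    ("log in as test user", log_in),
    ("retrieve account balance", fetch_balance),
]

# In CI: sys.exit(run_smoke(SMOKE_STEPS))
```

Stopping at the first failure is deliberate: if login breaks on a bad certificate, checking the balance tells you nothing new.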

What Is Sanity Testing?

Sanity testing is a quick “logic check” that targets a narrow slice of functionality after a small code change or hot-fix. It answers, “Did we fix what we meant to, and did we avoid collateral damage?”

Key traits

- Focuses on the module or flow touched by the change

- Runs on a stable build that has already passed smoke and base regression

- Aims to confirm the fix and nearby essentials still work

- Takes about as long as smoke, but digs deeper into one area
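One way to keep a sanity run focused on what a change touched is to map changed source paths to the checks that cover them. The sketch below assumes a hand-maintained mapping from path prefixes to check names; every path and check name in it is hypothetical. In practice the changed-file list might come from `git diff --name-only`.

```python
from typing import Iterable

# Hypothetical mapping: source path prefix -> sanity checks that cover it.
SANITY_MAP: dict[str, list[str]] = {
    "ui/history/": ["history_screen_loads", "repeat_payment_button_visible"],
    "payments/": ["repeat_payment_sandbox"],
    "reports/": ["export_csv_has_total"],
}


def select_sanity_checks(changed_paths: Iterable[str]) -> list[str]:
    """Return the sanity checks covering the changed paths, without
    duplicates, in the order they are first matched."""
    selected: list[str] = []
    for path in changed_paths:
        for prefix, checks in SANITY_MAP.items():
            if path.startswith(prefix):
                for check in checks:
                    if check not in selected:
                        selected.append(check)
    return selected
```

An empty result means no mapped area was touched; whether that should skip sanity or fall back to a broader run is a policy choice for your team.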

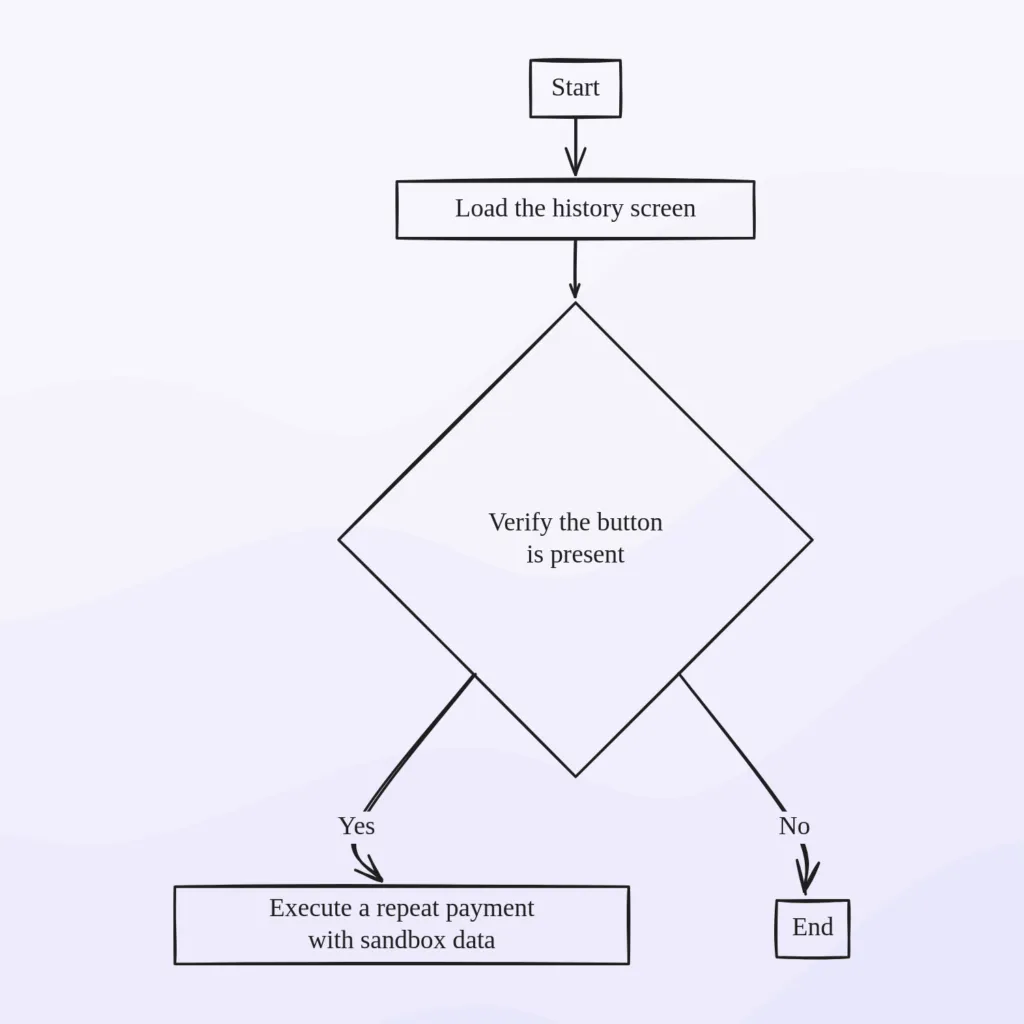

Real-world snapshot

In that same banking app, testers notice the “Repeat payment” button vanished from transaction history. A developer patches the UI layer and triggers a sanity set:

- Load the history screen

- Verify the button is present

- Execute a repeat payment with sandbox data

A full regression would waste hours when only the UI layer changed and the backend and infrastructure did not. Sanity proves the button is back and the repeat-payment flow still works end to end.

Smoke vs Sanity at a Glance

- Purpose: smoke ensures the build is viable; sanity verifies a specific change.

- Scope: smoke is minimal yet broad; sanity is narrow but deep.

- Timing: smoke fires right after every build; sanity fires after a hot-fix or flag flip.

- Pipeline effect: a smoke failure halts the train; a sanity failure rolls back only the patch.
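The pipeline-effect row can be written as a small decision function. This is a sketch that assumes the pipeline knows which stage failed; the stage and action names are illustrative and not taken from any particular CI product.

```python
from enum import Enum


class Stage(Enum):
    SMOKE = "smoke"
    SANITY = "sanity"


class Action(Enum):
    PROCEED = "proceed"
    HALT_PIPELINE = "halt pipeline"      # smoke failed: the build is not viable
    ROLL_BACK_PATCH = "roll back patch"  # sanity failed: revert only the change


def on_result(stage: Stage, passed: bool) -> Action:
    """Map a stage result to the pipeline action from the comparison above."""
    if passed:
        return Action.PROCEED
    if stage is Stage.SMOKE:
        return Action.HALT_PIPELINE
    return Action.ROLL_BACK_PATCH
```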

These distinctions matter to a CTO allocating infrastructure minutes and to a QA lead triaging limited tester hours.

When to Choose Smoke Tests

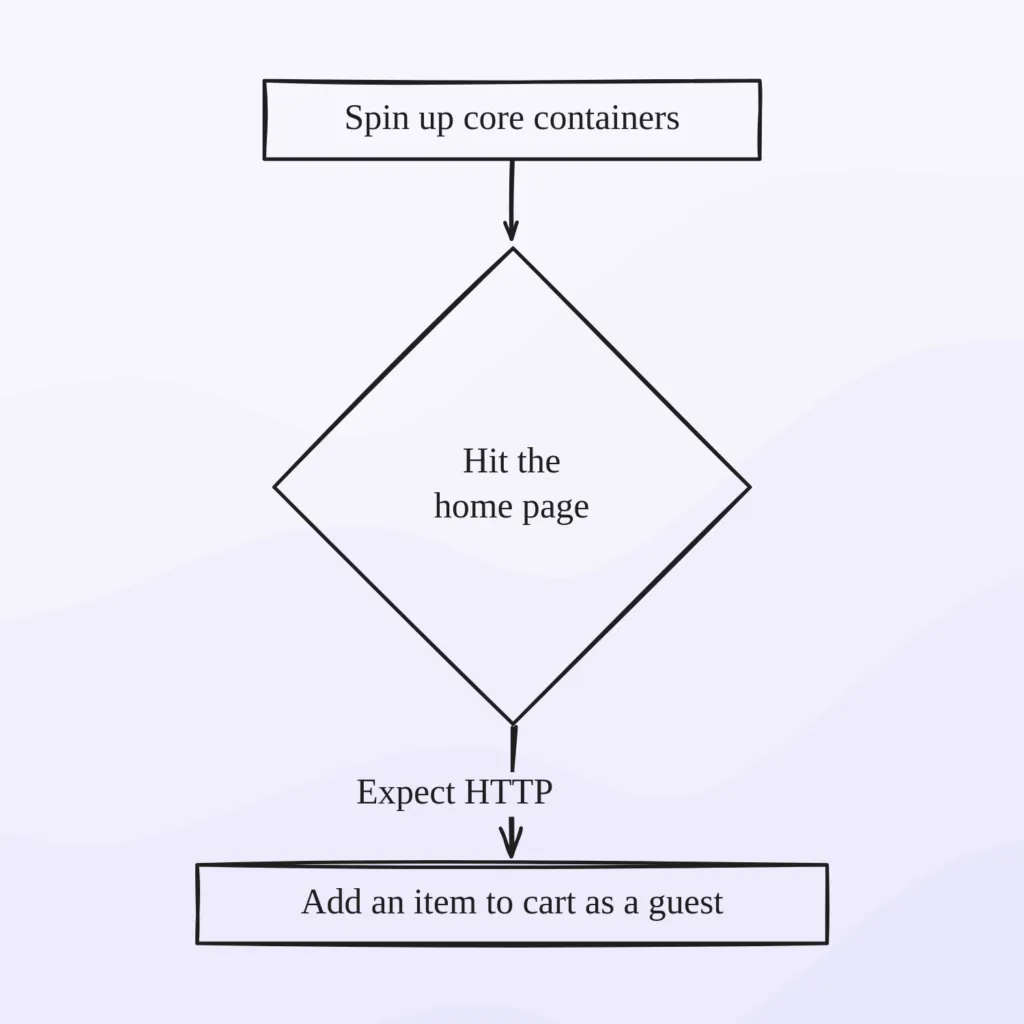

Nightly build gates

An e-commerce platform merges dozens of branches each night. At dawn the product team wants a green light before reviewing new features. Smoke steps:

- Spin up core containers

- Hit the home page and expect HTTP 200

- Add an item to cart as a guest

Passing builds graduate to staging; regression and load tests start only then—saving compute and keeping the nightly window intact.
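The “expect HTTP 200” step needs nothing beyond the Python standard library. This sketch assumes the pipeline passes in the home-page URL. `urlopen` follows redirects, so the check looks at the final status. Connection errors, timeouts, and non-2xx responses (which `urllib` raises as `HTTPError`) all count as a failed smoke step.

```python
import http.client
import urllib.request


def home_page_ok(url: str, timeout: float = 10.0) -> bool:
    """Return True if GET <url> ends in HTTP 200 (redirects are followed)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # OSError covers URLError, HTTPError (non-2xx statuses),
        # refused connections and timeouts.
        return False
```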

Full environment migrations

Moving microservices to a new cloud? A smoke check verifies that services are up, databases are wired, and message queues are consuming. Without that first “breath test,” the migration has no health signal until it is too late.
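The crudest form of that breath test confirms that each dependency accepts a TCP connection. This only proves the ports are open, not that databases are wired correctly or queues are consuming, so treat it as the first layer of a migration smoke check. The host names and ports below are hypothetical placeholders for your new environment's configuration.

```python
import socket

# Hypothetical endpoints in the migrated environment.
ENDPOINTS: dict[str, tuple[str, int]] = {
    "api": ("api.internal", 443),
    "postgres": ("db.internal", 5432),
    "rabbitmq": ("mq.internal", 5672),
}


def unreachable(endpoints: dict[str, tuple[str, int]], timeout: float = 3.0) -> list[str]:
    """Return the names of endpoints that fail a TCP connect (refused,
    timed out, or unresolvable host)."""
    down: list[str] = []
    for name, (host, port) in endpoints.items():
        try:
            with socket.create_connection((host, port), timeout=timeout):
                pass  # connection succeeded; close it immediately
        except OSError:
            down.append(name)
    return down

# An empty list means every service at least accepts connections.
```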

When to Choose Sanity Tests

Critical production bug-fix

A CRM export drops the “Total” column. After a quick SQL correction, the sanity set:

- Generate yesterday’s report

- Export to CSV

- Confirm the Total column exists

No smoke run is required: the build is healthy, and report accuracy is the risk.
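The last step, confirming the Total column exists, is easy to automate against the exported file. This sketch assumes the export is a CSV with a header row and a column literally named `Total`. It also requires every data row to hold a number in that column, which catches the case where the header survives but the values do not. Rejecting an empty report is a design choice, not something the original fix requires.

```python
import csv
import io


def total_column_ok(csv_text: str, column: str = "Total") -> bool:
    """Sanity check for the CRM export: the column is in the header and
    every data row holds a number in it."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames is None or column not in reader.fieldnames:
        return False
    rows = list(reader)
    if not rows:
        return False  # assumption: yesterday's report should not be empty
    for row in rows:
        try:
            float(row[column])  # None (short row) or "" both fail here
        except (TypeError, ValueError):
            return False
    return True
```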

Feature-flag validation

DevOps flips on a new discount algorithm. Sanity investigates only the affected flow:

- Create a cart

- Apply a promo code

- Verify the final charge

Running the whole regression suite would hammer dozens of untouched modules; sanity clears the flag in under a minute.
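“Verify the final charge” comes down to comparing the charged amount with an independently computed expectation. The new discount algorithm isn't described here, so this sketch assumes a hypothetical promo code worth a flat percentage. It uses `Decimal` so money arithmetic does not pick up floating-point rounding errors.

```python
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical promo table; the real discount rules are not specified here.
PROMOS: dict[str, Decimal] = {"SAVE10": Decimal("0.10")}


def expected_charge(cart: list[Decimal], promo: str) -> Decimal:
    """Subtotal minus the promo percentage, rounded to cents.
    Unknown codes give no discount."""
    subtotal = sum(cart, Decimal("0"))
    discount = subtotal * PROMOS.get(promo, Decimal("0"))
    return (subtotal - discount).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def charge_is_correct(cart: list[Decimal], promo: str, charged: Decimal) -> bool:
    """Sanity check: the amount checkout charged matches the expectation."""
    return charged == expected_charge(cart, promo)
```

For a cart of 19.99 and 5.01 with `SAVE10`, the expected charge is 22.50.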

Five Best Practices for Automating “Fast” Tests

- Keep the suites separate. Smoke stays tiny; sanity stays flexible.

- Let QA.tech own the boilerplate. An adaptive engine learns UI changes and refreshes tests automatically. Smoke always hits the product’s “aorta,” while sanity zeroes in on changed components.

- Wire both stages into CI/CD. Smoke is the first gate; sanity triggers on hot-fix branches or flag toggles.

- Watch runtime. If smoke creeps past five minutes, reevaluate—maybe you built a mini-regression by accident.

- Delete fearlessly. When a scenario loses relevance, let the AI craft a new one; maintaining dead code costs more than replacing it.
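The CI/CD and runtime practices can be sketched together in a small pipeline helper. It decides which stages a change triggers and warns when smoke overruns its budget. The `hotfix/` branch prefix is a hypothetical naming convention. The five-minute budget mirrors the rule of thumb in the list; adjust both to your own setup.

```python
import time
from typing import Callable

SMOKE_BUDGET_SECONDS = 5 * 60  # "if smoke creeps past five minutes, reevaluate"


def stages_for(branch: str, flag_toggled: bool = False) -> list[str]:
    """Smoke is always the first gate; sanity is added for hot-fix branches
    (hypothetical 'hotfix/' prefix) or feature-flag toggles."""
    stages = ["smoke"]
    if branch.startswith("hotfix/") or flag_toggled:
        stages.append("sanity")
    return stages


def timed_smoke(run: Callable[[], None], budget: float = SMOKE_BUDGET_SECONDS) -> float:
    """Run the smoke suite, warn if it exceeds its time budget, and
    return the elapsed time in seconds."""
    start = time.monotonic()
    run()
    elapsed = time.monotonic() - start
    if elapsed > budget:
        print(f"warning: smoke took {elapsed:.0f}s (budget {budget:.0f}s); "
              "it may have grown into a mini-regression")
    return elapsed
```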

Where QA.tech AI Speeds Up the Process

Traditional smoke and sanity tests break when the UI changes. A button moves, and your “Add to Cart” script fails not because the feature is broken, but because the test can’t adapt.

QA.tech’s AI agents solve this by understanding intent, not just elements. When our agent sees “Add item to cart,” it reasons about the user’s goal and adapts to UI changes automatically. Your smoke tests stay green through redesigns, and sanity checks focus on actual functionality—not brittle selectors.

The result? Smoke tests that run reliably after every deployment, and sanity checks that give you confidence in your hot-fixes without the maintenance overhead of traditional E2E scripts.

Wrapping It Up

Smoke tests answer, “Is the system alive?”

Sanity tests answer, “Did we fix the right thing without breaking neighbors?”

By separating these goals, you shorten test cycles, cut technical debt, and ship with greater confidence.
