The Ultimate Beginner’s Guide to QA Testing

Did you know that it’s 6 times more costly to fix a bug in production than it is to prevent it? If your web app has bugs in it, users tend to jump ship, devs will spend more time fixing issues than pushing new features, and customers likely won’t convert because they don’t trust your app.

Luckily, this guide can help. It covers the QA testing lifecycle, the importance of quality assurance in software development, and automated QA tools. I’ll also walk through the major types of QA testing and the best practices to implement. Finally, I’ll share how you can use AI agents like QA.tech to speed up testing and reduce costs.

What Is QA Testing?

In software development, QA testing is a continuous process that ensures an app meets the required quality standards and user expectations across builds. Its main role is to find and remove bugs before the app goes live. Testers also check that the software runs smoothly and delivers a good user experience.

Quality assurance helps teams reduce the risk of shipping new features. Every time there’s a change to your codebase, some processes and user flows may break. You need a way to monitor and test the app to ensure that it always meets usability needs.

Testing is also tied to user retention and revenue. Simply put, when users enjoy your software, they stick around longer. In fact, 88% of users abandon an app if they encounter bugs. QA accelerates release cycles by minimizing the bugs that reach production, which also cuts the time and cost that would otherwise go into fixing additional issues.

All in all, proper QA testing ensures that you don't ship bugs that keep your devs stuck in a perpetual cycle of fixing problems.

QA Testing vs. Software Testing: What’s the Difference?

Even though QA testing and software testing are often used interchangeably, they are not the same. While the former is focused on user experience, the latter checks isolated parts of an app.

For instance, QA assesses whether a user flow runs with zero bugs or if software works smoothly during heavy traffic. On the other hand, software testing is about making sure that a function only accepts certain input types and returns the expected output.

In short, software testing is only a part of QA, which also includes testing an app’s performance and security.

What Is the QA Testing Lifecycle?

The QA testing lifecycle is a standard step-by-step process that teams follow to test web apps thoroughly. Ideally, the lifecycle starts before coding begins and continues after the software has been deployed.

Although all the stages are important and none should be skipped, the actual time you’ll spend on each one varies depending on the software. Below, you’ll find the main stages of the QA testing lifecycle.

Requirement Analysis

At this stage, QA and product teams discuss what the app objectives are and what a great user experience should look like. It’s a back-and-forth process where QA reviews the software requirements document to note testing risks, dependencies, and areas to clarify. The goal is to ensure all team members are on the same page regarding the software’s intended user outcomes and key testing priorities.

Requirement analysis happens at the beginning of the software development lifecycle (SDLC), before the first line of code is written.

Test Planning

During test planning, the QA team works on its own to define the test scope. Here, they identify which stages of the SDLC to test (ideally all of them), along with testing strategies, timelines, and dependencies.

Test planning is an important step because it uncovers the kind of targeted test cases you need to create. Without a detailed plan, your team may be stuck developing random tests that are not very useful. This stage also helps you prioritize certain tests above others.

Test Case Development

At this point, testers create test cases for specific user flows. Each test includes a description, steps, and expected inputs and outputs.

If you want the best results, you should develop multiple tests for the same flow. Take booking a meeting, for instance. There can be multiple test cases covering when both a title and a time stamp are present, when only a time stamp is included, and when a user tries to book a meeting at an occupied time.
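To make the meeting-booking example concrete, here is a minimal sketch using Python’s built-in `unittest`. The `book_meeting` function, its return messages, and the rule that a title is optional are hypothetical stand-ins for your app’s real booking logic; the point is the pattern of running several test cases against one user flow.

```python
import unittest
from datetime import datetime

SLOT = datetime(2025, 3, 10, 14, 0)  # an arbitrary sample time slot


def book_meeting(calendar, title, start):
    """Hypothetical booking logic: a time is required, a title is optional."""
    if start is None:
        return "error: time is required"
    if start in calendar:
        return "error: time slot is occupied"
    calendar[start] = title or "Untitled meeting"
    return "booked"


# One row per test case: description, existing bookings, (title, start), expected result.
BOOKING_CASES = [
    ("title and time stamp present", {}, ("Sprint review", SLOT), "booked"),
    ("only time stamp present", {}, (None, SLOT), "booked"),
    ("time slot already occupied", {SLOT: "1:1"}, ("Sprint review", SLOT),
     "error: time slot is occupied"),
]


class BookMeetingFlowTest(unittest.TestCase):
    def test_booking_flow(self):
        for description, existing, (title, start), expected in BOOKING_CASES:
            # subTest reports each case on its own, so one failure doesn't hide the others.
            with self.subTest(description):
                calendar = dict(existing)  # fresh copy so cases don't affect each other
                self.assertEqual(book_meeting(calendar, title, start), expected)
```

Saved as `test_booking.py`, the suite runs with `python -m unittest test_booking`. Adding another edge case is just one more row in `BOOKING_CASES`.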

In order to develop test cases with wide coverage, you have to think carefully about all the ways things can go wrong. However, you can also make it easier by using an AI agent that automatically generates tests for different scenarios.

How to Write a Good Test Case

- Use a descriptive test case title: It should explain both the test and expected results in one line. For instance: "Auth - Confirm incorrect password returns invalid login error." That is: [USER FLOW] - Confirm [ACTION] returns [RESULT].

- Briefly state how to perform the test: Be concise and avoid unnecessary steps.

- Include all the essential info: Make sure you state expected inputs and outputs. If login is required, include the test login info.

- Define the possible test case statuses: For instance, a test case can be marked as pass, fail, or pending.

- Make your test case atomic: It should test only one functionality or one user flow.

- Make your test case reusable: Write test cases that can be used for multiple builds.
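If you keep test cases in code or export them from a test management tool, the checklist above maps naturally onto a small record type. The Python sketch below is one possible layout, not a standard format: `TestCaseSpec`, its field names, and the sample login data are illustrative assumptions. The title is built from the [USER FLOW] - Confirm [ACTION] returns [RESULT] pattern, and every case starts as pending until it is executed.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    """The three statuses suggested above."""
    PASS = "pass"
    FAIL = "fail"
    PENDING = "pending"


@dataclass
class TestCaseSpec:
    user_flow: str
    action: str
    result: str
    steps: list[str]              # short, essential steps only
    inputs: dict[str, str]        # expected inputs, including test login info
    expected_output: str
    status: Status = Status.PENDING  # not executed yet

    @property
    def title(self) -> str:
        # [USER FLOW] - Confirm [ACTION] returns [RESULT]
        return f"{self.user_flow} - Confirm {self.action} returns {self.result}"


login_case = TestCaseSpec(
    user_flow="Auth",
    action="incorrect password",
    result="invalid login error",
    steps=["Open the login page", "Submit a valid email with a wrong password"],
    inputs={"email": "qa-user@example.com", "password": "wrong-password"},
    expected_output="An invalid login error message is shown",
)
print(login_case.title)  # → Auth - Confirm incorrect password returns invalid login error
```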

Test Execution

This is when the QA team finally runs the test cases prepared in the previous stage. If needed, the dev team sets up the required environments and loads test databases and data. Usually, test environments mimic the production environment closely.

During this stage, the QA team also logs the results of all test cases.
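Logging results can be as simple as recording a status per test case. The minimal Python sketch below assumes each test case is a zero-argument function that raises `AssertionError` when its check fails; `run_test_cases` and the two sample checks are hypothetical, and in practice a test runner such as `unittest` or pytest does this bookkeeping for you.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("qa.execution")


def run_test_cases(test_cases):
    """Run each named test case and log a pass/fail status for it.

    A test case passes if it returns normally and fails if it raises.
    Unexpected errors (e.g. missing test data) are logged as failures too,
    with the exception type included for easier triage.
    """
    results = {}
    for name, test in test_cases.items():
        try:
            test()
        except AssertionError as exc:
            results[name] = "fail"
            log.error("FAIL %s: %s", name, exc)
        except Exception as exc:
            results[name] = "fail"
            log.error("FAIL %s: unexpected %s: %s", name, type(exc).__name__, exc)
        else:
            results[name] = "pass"
            log.info("PASS %s", name)
    return results


# Hypothetical checks standing in for real interactions with the app.
def check_invalid_login_message():
    message = "Invalid login error"
    assert message.startswith("Invalid"), message


def check_cart_total():
    total = 10 + 5
    # Deliberately wrong expectation so the example logs a failure.
    assert total == 20, f"expected 20, got {total}"


results = run_test_cases({
    "Auth - Confirm incorrect password returns invalid login error": check_invalid_login_message,
    "Cart - Confirm two items return correct total": check_cart_total,
})
```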

Reporting and Documentation

Finally, the QA team formally documents the testing results and the overall pass rate. They report the number of test cases run and deliver insights on whether more tests are needed or the software is ready to deploy.
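The pass rate itself is simple arithmetic. In the hypothetical sketch below, pending test cases are left out of the denominator because they were never executed; that is an assumption, and some teams divide by the total number of test cases instead.

```python
from collections import Counter


def summarize(results):
    """Count statuses and compute the pass rate (%) over executed test cases."""
    counts = Counter(results.values())
    executed = counts["pass"] + counts["fail"]  # pending cases were not run
    pass_rate = 100 * counts["pass"] / executed if executed else 0.0
    return {
        "total": len(results),
        "passed": counts["pass"],
        "failed": counts["fail"],
        "pending": counts["pending"],
        "pass_rate": round(pass_rate, 1),
    }


print(summarize({"login": "pass", "signup": "pass", "booking": "fail", "export": "pending"}))
# → {'total': 4, 'passed': 2, 'failed': 1, 'pending': 1, 'pass_rate': 66.7}
```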

The most important part of this step is creating comprehensive bug reports for any issues you run into.

How to Write a Good Bug Report

- Write a descriptive title that states the problem and location: For instance, “Unable to save a favorite item on the product page.”

- Include a brief description, if necessary: An effective title may be enough.

- Always state the environment the bug showed up in: Mention whether it happened in Firefox or when using 3G, for example.

- Include steps to reproduce the bug: List only the essential steps, and keep them concise.

- Add the expected result and actual result: That is, what the outcome should have been vs. what it actually was.

- Add a screenshot or video recording: It’s important to attach visual proof of the bug so that devs can see exactly how the error happened.

- Include bug priority: Finally, indicate whether the bug is critical or low priority.
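A bug report template can enforce every item on this checklist. The sketch below is a hypothetical `BugReport` record that renders to Markdown for pasting into a ticket; the field names and sample values are illustrative, not the format of any particular issue tracker.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    title: str            # problem + location
    environment: str      # browser, OS, network, etc.
    steps: list[str]      # only the essential steps
    expected: str
    actual: str
    priority: str         # e.g. "critical" or "low"
    description: str = ""  # optional when the title says it all
    attachments: list[str] = field(default_factory=list)  # screenshots, recordings

    def to_markdown(self) -> str:
        """Render the report as Markdown, skipping the description if empty."""
        lines = [f"## {self.title}"]
        if self.description:
            lines.append(self.description)
        lines.append(f"**Environment:** {self.environment}")
        lines.append("**Steps to reproduce:**")
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        lines.append(f"**Expected:** {self.expected}")
        lines.append(f"**Actual:** {self.actual}")
        lines.append(f"**Priority:** {self.priority}")
        lines += [f"- Attachment: {path}" for path in self.attachments]
        return "\n".join(lines)


bug = BugReport(
    title="Unable to save a favorite item on the product page",
    environment="Firefox on Windows, throttled to 3G",
    steps=["Open any product page", "Click the heart icon"],
    expected="The item appears in the favorites list",
    actual="Nothing happens and no item is saved",
    priority="critical",
    attachments=["favorite-bug.mp4"],
)
print(bug.to_markdown())
```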

Is Agentic AI Important in QA Testing?

After the rise of AI coding tools like Claude, Cursor, and Copilot, dev teams started pushing builds at record speed. However, that also meant more features to test and more bugs to fish out, which, in turn, meant more QA pipelines than most teams were prepared for. Enter AI agents.

QA agents are independent AI processes that take charge of testing flows, including planning, test case development, execution, and documentation. While AI tools can be prompted to perform a single action, like generating a test case or running a script, AI agents autonomously make decisions about test cases, test environments, and bug priority.

Adding an AI agent like QA.tech into your team’s testing workflow offloads a significant amount of manual labor. It enables instant test case generation by scanning your app’s UI to identify features and generate test cases in seconds. It also makes exploratory testing easy, since it takes just a few minutes to crawl your site and uncover test cases that may have been overlooked. Plus, because the AI agent can complete entire testing processes independently, your QA team is free to focus on more advanced testing flows.

Additionally, AI agents like QA.tech offer automatic test result documentation: they generate detailed bug reports instantly, often complete with screen recordings and meaningful insights into test results. They also help teams keep their testing lifecycles fast enough to match the pace of development. Finally, AI agents support self-healing tests, which means they can automatically update test scripts in response to UI changes. That way, you can rest assured that the test cases stay reusable across deployments.

Hands-on AI QA Testing Project

Let’s see how you can integrate your web app into QA.tech in minutes. This tutorial uses a demo meeting booking app, which you can find here.

How to Add a Project and Generate Test Cases in QA.tech

- First, click “Create Project” at the top of the dashboard.

- Next, give your project a name and enter the URL. For pre-deployment tests, enter the staging URL; for post-deployment tests, enter the live URL. Click “Continue.”

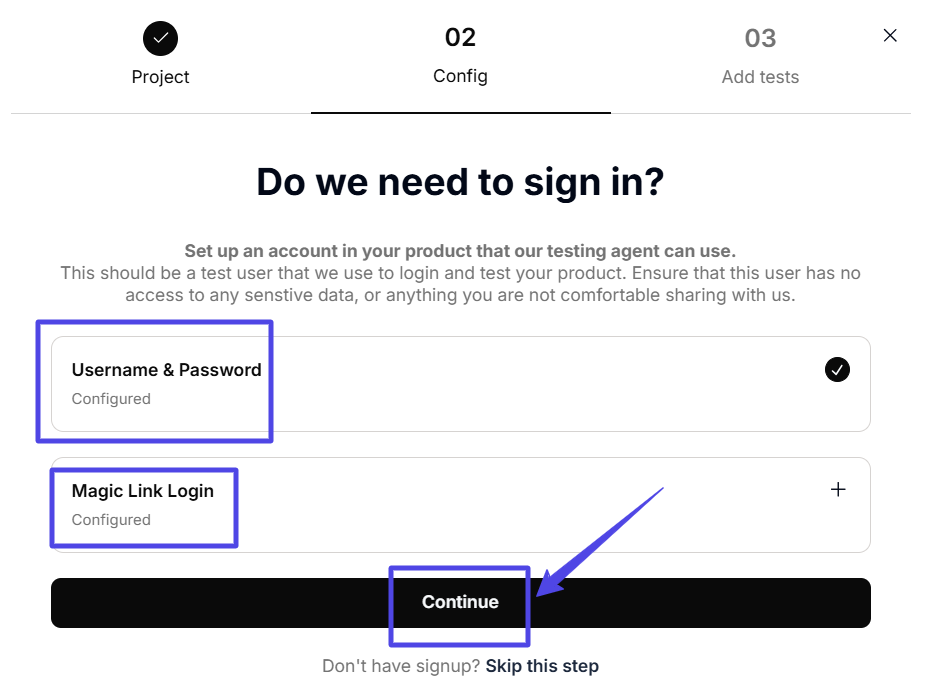

- Once the project has been added, enter test login details, if necessary. Click “Save” and then “Continue.”

- This is where the magic happens. The AI agent automatically scans your web app to generate test cases. You can then run them by clicking “Run tests.”

- Click “Results” in the side menu to see the recently run tests. In this demo, the AI agent generated both positive and negative test cases to cover one user flow: the login.

- Any time you want to run more tests, click “Test Cases” in the side menu, select “Add Test Case,” and then “Analyze My Site.” The AI agent runs an audit to discover scenarios to test.


How to Send an API Request in QA.tech

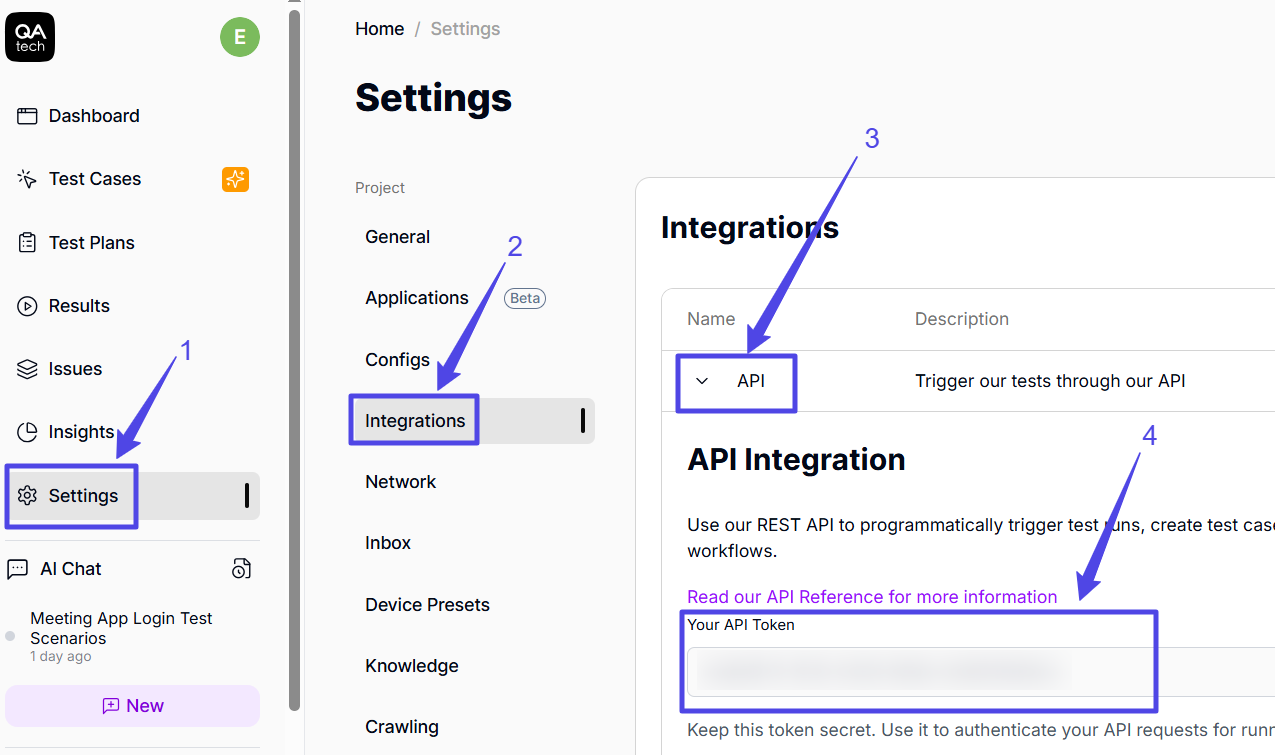

To send a request, you have to get a Bearer token for authentication.

Click “Settings” in the side menu and select “Integrations.” Then click “API” and copy your secret token.

You can use it to make direct cURL requests to run or create new test cases. You can also integrate it with CI/CD pipelines in GitHub or send issue tickets to Linear or Jira. Interesting, right?
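In code, authenticating with a Bearer token just means sending an `Authorization: Bearer <token>` header, the same thing `curl -H` does on the command line. The Python sketch below builds such a request with the standard library but does not send it. The endpoint URL, the JSON payload, and the `QATECH_API_TOKEN` environment variable are placeholders, not QA.tech’s actual API; take the real endpoints and payloads from the docs.

```python
import json
import os
import urllib.request

# Placeholder endpoint (hypothetical): look up the real URL in the QA.tech docs.
API_URL = "https://api.example.com/v1/test-runs"


def build_authenticated_request(token: str, payload: dict) -> urllib.request.Request:
    """Build (but don't send) a JSON POST request authenticated with a Bearer token."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Keep the secret out of source code; QATECH_API_TOKEN is a hypothetical variable name.
token = os.environ.get("QATECH_API_TOKEN", "replace-me")
request = build_authenticated_request(token, {"example": "payload"})
# To actually send it: urllib.request.urlopen(request)
```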

Find out more in the QA.tech docs.

What Are the Types of QA Testing?

Even though there are over a dozen testing types, these are the four major ones:

Unit Testing

Unit testing is used for checking individual code components, like single functions and classes, to ensure that they return the right values each time. For instance, you can unit test a function that adds a new meeting link to a calendar app. The test would check whether it accepts the required input type and only adds the link in an empty time block.
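Here is what that unit test could look like with Python’s built-in `unittest`. The `add_meeting_link` function and its calendar representation (a dict mapping time slots to a link or `None`) are hypothetical; the tests check the two behaviors described above: wrong input types are rejected, and links are only added to empty time blocks.

```python
import unittest


def add_meeting_link(calendar, slot, link):
    """Hypothetical function under test: adds a link to an empty time slot only."""
    if not isinstance(link, str):
        raise TypeError("link must be a string")
    if calendar.get(slot) is not None:
        raise ValueError(f"slot {slot} is already taken")
    calendar[slot] = link


class AddMeetingLinkTest(unittest.TestCase):
    def setUp(self):
        # Each test gets a fresh calendar with one free and one busy slot.
        self.calendar = {"09:00": None, "10:00": "https://meet.example.com/standup"}

    def test_adds_link_to_empty_slot(self):
        add_meeting_link(self.calendar, "09:00", "https://meet.example.com/demo")
        self.assertEqual(self.calendar["09:00"], "https://meet.example.com/demo")

    def test_rejects_non_string_link(self):
        with self.assertRaises(TypeError):
            add_meeting_link(self.calendar, "09:00", 12345)

    def test_rejects_occupied_slot(self):
        with self.assertRaises(ValueError):
            add_meeting_link(self.calendar, "10:00", "https://meet.example.com/demo")
        # The existing meeting must be left untouched.
        self.assertEqual(self.calendar["10:00"], "https://meet.example.com/standup")
```

Because each test touches only this one function, a failure points straight at the unit that broke.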

These tests are typically done in early phases of software development. They are particularly important because they minimize bugs and allow for better QA test coverage.

Integration Testing

Integration testing involves combining multiple components to test how they work together: for example, testing whether a frontend component successfully displays a response from the backend.

This type of testing uncovers compatibility issues early on, which is crucial because two individual functions can work well on their own but have issues communicating with each other.
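The sketch below shows the idea in Python with two hypothetical components: a backend handler that serializes meetings to JSON and a frontend renderer that turns that JSON into HTML. The integration test feeds the real output of one into the other, so a mismatch such as a renamed field would make it fail even if each component passed its own unit tests.

```python
import html
import json


def get_meetings_response(store):
    """Hypothetical backend handler: serializes meetings (sorted by start time) to JSON."""
    meetings = [{"title": title, "start": start} for start, title in sorted(store.items())]
    return json.dumps({"meetings": meetings})


def render_meeting_list(body):
    """Hypothetical frontend component: turns the JSON body into an HTML list."""
    data = json.loads(body)
    items = "".join(
        f"<li>{html.escape(m['start'])} {html.escape(m['title'])}</li>"
        for m in data["meetings"]
    )
    return f"<ul>{items}</ul>"


def test_frontend_displays_backend_response():
    # Integration: the backend's actual output goes straight into the renderer.
    store = {"09:00": "Standup", "13:00": "Design <review>"}
    page = render_meeting_list(get_meetings_response(store))
    assert page == "<ul><li>09:00 Standup</li><li>13:00 Design &lt;review&gt;</li></ul>"
```

pytest collects the `test_` function automatically; calling it directly also works.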

End-to-End Testing

End-to-end testing tracks entire user journeys to see whether all app components work seamlessly together in order to complete user flows. An example of this would be ensuring a user can log in to their calendar app to add a new meeting.

E2E tests are performed much later in the SDLC, and they give your team a clear idea of how users experience your software.

Manual and Automated Testing

Manual tests involve humans writing test cases and executing them without any tools. Automated tests, on the other hand, involve running test scripts to uncover flaws in the software.

While manual tests catch visual bugs that are difficult to script, automated tests are great for eliminating human bias and running repetitive tests, especially as part of build pipelines. That is why it’s important that you run both.

QA Testing Tools

There’s a wide array of tools that QA teams use to automate web app testing. Some are better suited to usability tests, while others do better with unit and integration tests.

QA.tech is an AI agent that scans your web app to generate E2E test cases. It automatically discovers scenarios, generates and executes tests, and returns detailed insights and bug reports if needed. One of its major features is detecting changes in your web app and generating new test cases accordingly. It also integrates seamlessly with GitHub and GitLab CI/CD pipelines, which enables it to review pull requests and monitor code pushes.

QA.tech goes beyond basic automation by running exploratory tests to uncover hidden bugs and edge cases. Each test is accompanied by video recordings and other detailed insights. Additionally, the platform offers an API that allows teams to create and run tests and sync support tickets directly with tools like Jira and Linear.

Cypress is a browser-based JavaScript test automation framework for testing UI components. It also supports integration and end-to-end tests for web applications, and it integrates with CI pipelines on platforms like GitHub and GitLab.

Playwright is an open-source test automation tool that is also used for E2E testing. Even though it’s Node.js-based, Playwright supports multiple programming languages, including C#, Python, and Java. It offers multi-browser support and both headless and headed testing modes.

Selenium is an open-source framework for E2E testing of web app frontends. It offers features like test parallelization (through Selenium Grid), record and playback (through Selenium IDE), and CI/CD integration. Like many options here, it supports testing on several browsers.

No-code tools allow for testing web components without writing a single script. Testers build tests with drag-and-drop, predefined commands, and record-and-replay. Though these tools allow for fast testing cycles and very low test maintenance, their features are limited. Popular options include Mabl and BugBug.

Top 7 QA Testing Practices

QA testing strategies vary across teams and projects, but there are some core principles you should keep in mind. If you follow these guidelines, you’ll deliver the best version of your software to users no matter how lean or robust your QA processes are.

- Use continuous testing: Run automated tests repeatedly, both before and after every deployment. Continuous testing is important for maintaining high usability even after you’ve updated a feature or pushed a new one.

- Perform exploratory tests: No matter how many test cases you have, some bugs might slip through the cracks. Exploratory tests are for discovering hidden issues that automated tests may have missed. Who knows, these random bugs may be the reason users aren’t converting.

- Test in multiple environments: Unsurprisingly, different environments render UI components differently. For instance, a component that is clickable in Chrome may be unresponsive in Firefox or oversized on a Mac. Also, some users may access your site during heavy traffic or over slow connections. Testing in multiple environments gives you a better idea of how your software performs in various scenarios.

- Focus on usability testing: Your users come first, so every part of your app needs to work as seamlessly as possible. Even something as simple as returning a message for a pending feature can have a noticeable impact on user retention.

- Maintain clear and constant communication: The QA team should stay in constant communication with the dev team and help prioritize the bugs that need fixing. They should also create bug tickets promptly and update them once the bugs are resolved. That way, QA and dev can work together to release reliable software.

- Use AI to speed up testing processes: AI agents simplify QA by independently generating and running tests. This can save you tons of hours that you would otherwise spend manually coding test scripts and drafting bug reports. Even better, AI agents automatically detect changes in your code to generate new tests and rerun existing ones to ensure continuous testing.

- Write quality test cases: Make sure that each test case is atomic, clear, and concise, and that it tests only one feature.
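To put the multiple-environments practice into action, it helps to enumerate environments explicitly rather than testing in whatever browser is at hand. This Python sketch builds a test matrix from every combination of browser, viewport, and network condition. The specific values are assumptions (the browser names mirror the engines Playwright ships with); replace them with what your users actually use.

```python
from itertools import product

# Hypothetical dimensions: swap in the browsers, screens, and networks your users have.
BROWSERS = ["chromium", "firefox", "webkit"]
VIEWPORTS = [(1280, 720), (390, 844)]  # desktop-sized and phone-sized
NETWORKS = ["wifi", "slow-3g"]


def environment_matrix(browsers, viewports, networks):
    """Return every combination of browser, viewport, and network to test against."""
    return [
        {"browser": b, "viewport": v, "network": n}
        for b, v, n in product(browsers, viewports, networks)
    ]


matrix = environment_matrix(BROWSERS, VIEWPORTS, NETWORKS)
print(len(matrix))  # → 12
```

Each entry can be handed to your test runner or CI job as a separate run. Keep in mind that the matrix grows multiplicatively: three browsers, two viewports, and two network profiles already mean 12 runs.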

Final Word

QA testing is an indispensable part of the software release lifecycle. As such, it can take up plenty of resources, especially when there are lots of variables to deal with.

Luckily, you can streamline your testing process using QA.tech, an AI agent that scans your app and automatically generates, executes, and reports on tests. Find out how to set it up in minutes and start pushing updates with confidence.
